Application Run-time

General Description

This component provides the means to run and deploy Docker containers. It also provides several additional sub-components that supply a useful minimum of core capabilities, e.g. a gateway, container registry, or service mesh. Components are registered within the Application Run-time through manifest files, which describe each component, what it offers, and its dependencies on other components. The Application Run-time also comes with key components that are essential for any of the ZDMP Assets (zComponents or zApps) to execute. These capabilities and the setup of the platform are managed through a web UI.

The Application Run-time uses a standard description of a component so that it can manage all the components that run inside the platform. The description covers the resources themselves and the other resources they depend upon within the context of the platform. This metadata is stored in the Docker labels and the manifest files.

The Developer Tier components run externally to the platform. The Developer Tier is used to create platform-conformant containers that can be generated and loaded onto the Marketplace. The platform can then install these containers from the Marketplace. Once instantiated, the containers can be accessed as a service. Note that many of the Developer Tier components, such as the Orchestration Designer, are available on the Marketplace by default.

Individual ZDMP Assets within the system must expose a RESTful API to control and configure them. These control systems are registered with connections to the Services and Message Bus. This also allows data transfer through a message bus.

| Resource | Location |
|---|---|
| Source Code | Link |
| X Open API Spec | Link |
| Video | Link |
| Further Guidance | DNS configuration |
| Additional Links | Kubernetes Documentation |
| Online Documentation | Link |

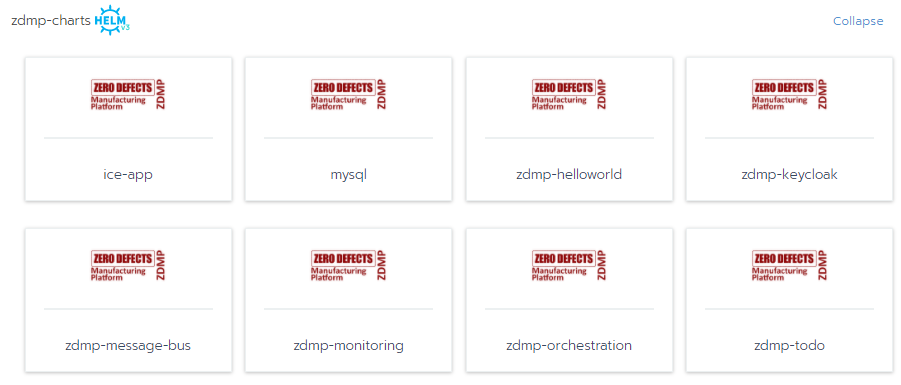

Screenshots

The following images are illustrative screen shots of the component.

Component Author(s)

| Company Name | ZDMP Acronym | Website | Logo |
|---|---|---|---|
| Information Catalyst for Enterprise | ICE | www.informationcatalyst.com |  |

Commercial Information

| Resource | Location |
|---|---|
| IPR Link | Application Run-Time |
| Marketplace Link | miniZDMP |

Architecture Diagram

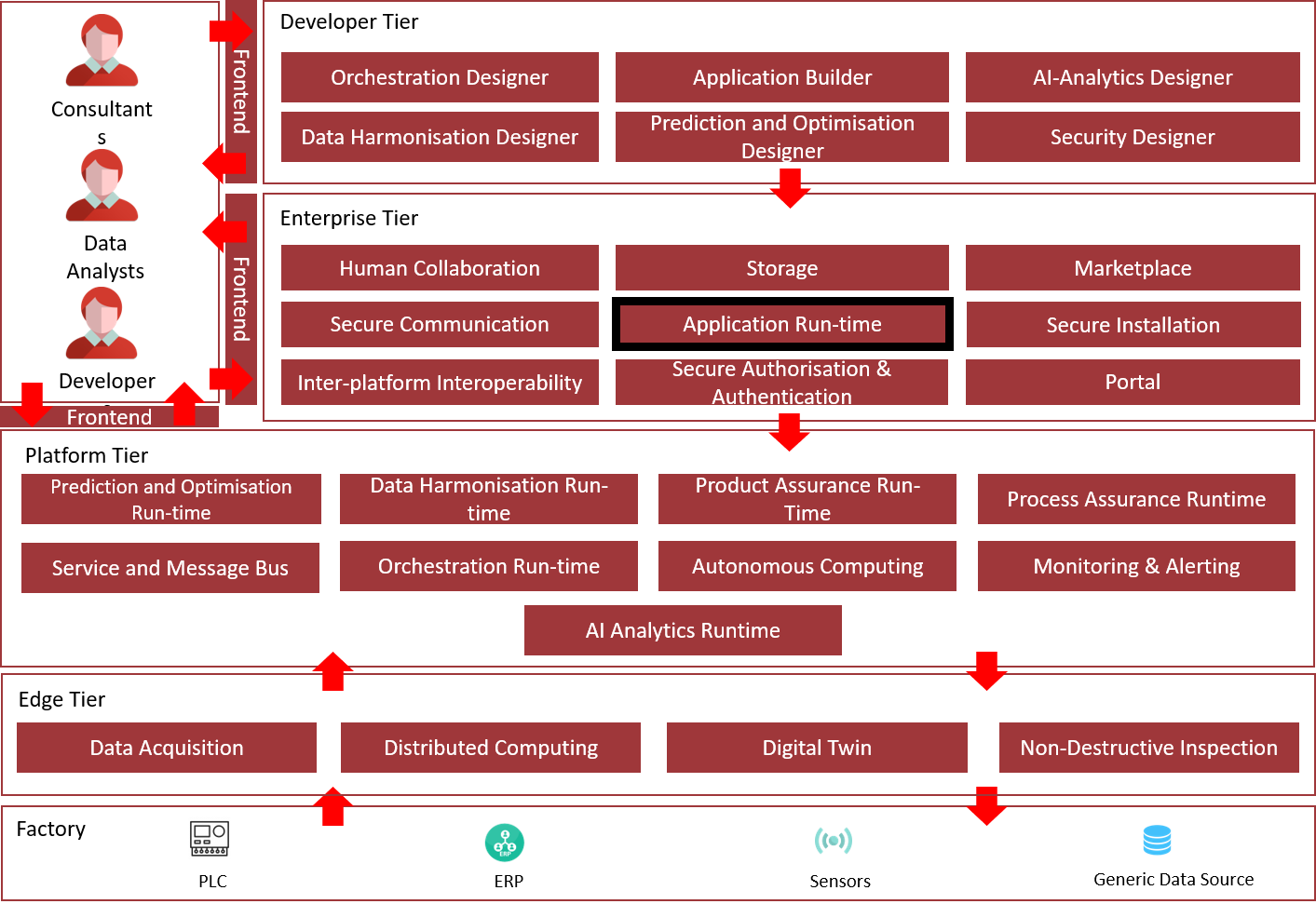

The following diagram shows the position of this component in the ZDMP architecture:

Figure 2: Position of Component in ZDMP Architecture

Benefits

Management and visibility of all components: One place to manage computing infrastructure and services

Extendable across cloud service: Allowing for versatile usage of cloud resources enabling computing to scale with a business

Cloud vendor agnostic: Allowing the choice of infrastructure provider, on-site, Amazon, Google, Microsoft, or alternative VPS (Virtual Private Server) providers

Scalability and versatility: Components and services can be scaled to meet user demands

Fully open-source solution with no vendor lock-in: No tie-in to a single company, with the benefits of community-driven software that is backed by businesses, which reduces the integration problems of software deployment

Support: Critical infrastructure can be managed and supported by technical experts in the field

Features

This component offers the following features:

Application Management

API

Expose Services

Monitoring

Cluster Management

CI/CD

Application Management

Customized charts make it easy to deploy applications repeatedly. The applications are bundled as Helm Charts. A collection of applications in a Helm chart structure is called a Catalog and can be stored in a GitLab or GitHub repository.

Helm Charts are collections of files that describe related Kubernetes resources. A Helm Chart can deploy a simple application or something more complex, such as a full web app stack.

Catalogs can be managed at different scopes: Global, Cluster, or Project.

API

An API is provided that allows users to perform management operations using HTTP calls, for instance retrieve information about resources, perform actions such as deploying components, etc. These operations can also be performed via the UI.

To call the API, HTTP basic authentication is used: an API Key is generated and included in the API call. This key may restrict access at cluster or project level, and it can have an expiration period, typically a day, a month, or a year, or a custom period expressed in minutes or hours.

Filtering can be performed on most of the collections provided by using HTTP query parameters. The API UI shows the appropriate request. Some collections can be sorted by using common fields using HTTP query parameters: sort={sort name} and order={asc or desc}.
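As a rough illustration of the authentication and query parameters described above, the following Python sketch builds (but does not send) a request. The host name and key values are placeholders, and the sketch assumes that the API Key is supplied as an access-key and secret-key pair used as the basic-auth user name and password.

```python
import base64
from urllib.parse import urlencode
from urllib.request import Request


def build_list_request(base_url, collection, access_key, secret_key,
                       sort=None, order=None, **filters):
    """Build a GET request for a v3 collection with optional filter and sort parameters."""
    params = dict(filters)
    if sort:
        params["sort"] = sort
    if order:
        params["order"] = order  # "asc" or "desc"
    url = f"{base_url.rstrip('/')}/v3/{collection}"
    if params:
        url += "?" + urlencode(params)
    # Assumption: the API Key pair is sent as HTTP basic credentials.
    token = base64.b64encode(f"{access_key}:{secret_key}".encode()).decode()
    return Request(url, headers={"Authorization": f"Basic {token}"})


# Placeholder host and credentials, for illustration only.
req = build_list_request("https://runtime.example", "projects",
                         "token-abc", "secret", sort="name", order="desc")
print(req.full_url)
```

The request object can be passed to urllib.request.urlopen once a real host and key are in place.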

Expose Services

Services use internal ports for communication between components inside the cluster, while NodePort or Ingress allows UIs and APIs to be published to the data gateway.

hostPort exposes the container to the external network at <hostIP>:<hostPort>, where the IP address of the Kubernetes Node is the hostIP where the container is running and the hostPort is the port requested by the component.

By default, Kubernetes services are accessible at the clusterIP, which is an internal IP address reachable only from inside the cluster. To make a service accessible from outside, a NodePort service is required, with a port from the range 30000-32767. After the service is created, the kube-proxy that runs on every node forwards all incoming traffic to the selected pods.
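A small Python sketch of the range check implied above may help when choosing a port for a chart. The function name is hypothetical, and the range is the default one quoted in this section; a cluster may be configured with a different range.

```python
# Default NodePort range 30000-32767 (inclusive), as quoted in the text.
NODE_PORT_RANGE = range(30000, 32768)


def is_valid_node_port(port):
    """Return True when the port lies in the default NodePort range."""
    return port in NODE_PORT_RANGE


print(is_valid_node_port(30080))
print(is_valid_node_port(8080))
```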

The Load Balancer can be used if a cloud provider is enabled in the configuration of the Kubernetes Cluster. It can provision a load balancer on AWS, Azure, CloudStack, GCE and OpenStack.

Ingress is deployed on the top of Kubernetes, and it is a load balancer managed by Kubernetes. This forwards the traffic straight to the selected pods which is more efficient.

Kubernetes ingress has been the service type of choice to expose the ZDMP components to the internet.

To this end, an nginx reverse proxy is deployed in the same network as the Kubernetes cluster. That means nginx is internal to the network but not internal to the Kubernetes cluster, i.e. it is not deployed to the Kubernetes cluster.

nginx is configured with specific mapping rules that map a single URL to the multiple components deployed in the platform. This reduces management overhead because there is no need to register new DNS names when a new component is installed.

The nginx software listens to server name platform.zdmp.eu, then uses URI paths to select specific tenant and component. The server name is configurable via nginx configuration.

As an example, if access is needed to app1 in tenant1, the URL is: https://app1-tenant1.platform.zdmp.eu.

This address is transformed in nginx to an internal URL in the form of https://app1.tenant1.

Then this internal address is used as the host for the ingress resource.

The value for the tenant is zdmp in case of multi-tenant zApps, so if access is needed to the portal, the URL is https://portal-zdmp.platform.zdmp.eu.
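The host name mapping described in the examples above can be sketched in Python as follows. This is only an illustration of the naming scheme, not the nginx configuration itself. The function name is hypothetical, and the sketch assumes that the tenant is the part of the first label after the last hyphen.

```python
def to_internal_host(external_host, server_name="platform.zdmp.eu"):
    """Map '<app>-<tenant>.<server_name>' to the internal host '<app>.<tenant>'."""
    suffix = "." + server_name
    if not external_host.endswith(suffix):
        raise ValueError(f"{external_host!r} is not under {server_name!r}")
    label = external_host[: -len(suffix)]
    # Assumption: the tenant is whatever follows the last hyphen.
    app, sep, tenant = label.rpartition("-")
    if not sep or not app or not tenant:
        raise ValueError(f"cannot split {label!r} into app and tenant")
    return f"{app}.{tenant}"


print(to_internal_host("app1-tenant1.platform.zdmp.eu"))
print(to_internal_host("portal-zdmp.platform.zdmp.eu"))
```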

Notice also that https is being used. Certificates are also provided for the external and the internal URLs. The external one at the nginx level will be CA signed and the internal ones are provided by the Secure Communication component.

Monitoring

Through the UI, administrators can examine all resources of a zApp; these include workloads, containers, pods, services, volumes, ConfigMaps, resource usage, etc. This information lets the administrator evaluate the zApp’s performance and identify and remove bottlenecks in order to improve overall performance.

This configuration can be directly edited from within the UI, which gives an extra way to deploy quickly and then extract the correct YAML for inline or offline editing.

Logs are accessible for every pod in the cluster to monitor any failures, and they can be downloaded for further support.

Cluster Management

With the use of Ansible scripts, a cluster can be deployed in a few steps. The Application Run-time centralizes the management of different clusters, whether these are on bare metal or on public and private clouds such as EKS, GKE, and AKS. Switching between clusters and projects directly from the UI facilitates cluster provisioning, upgrades, user management, and the enforcement of security policies.

With the use of Helm chart Catalogs, administrators can simultaneously install and upgrade zApps in multiple clusters and projects.

miniZDMP facilitates the on-premise deployment of the components by simulating an InCloud environment. Using the instructions provided in the documentation, users can convert their applications from Docker-compose to the Application Run-time environment.

CI/CD

For Continuous Deployment, Jenkins is used to deploy to Kubernetes environments. With a single CI/CD instance, the structure of Helm charts can be checked to ensure they do not contain errors that could affect the Catalog. The pipeline also validates YAML files and resources such as Persistent Volumes (PV), Ingress, and cluster roles, among others. Once the chart has been checked, it is installed on a temporary test environment to check the health of the endpoints and is then uninstalled. After a successful test, the chart is pushed to the Catalog, from where the CD installs it in Production.

System Requirements

Platform Infrastructure minimal requirements needed:

OS: Ubuntu or CentOS

RKE Kubernetes cluster for Applications formed by at least 2/10 machines, with at least the following specifications:

Master machine (1/3) with 2 CPU, 4GB RAM, and 100GB of disk

Worker machine (1/5) with 8 CPU, 16GB RAM, and 256GB of disk

K3s or RKE cluster for Rancher cluster:

Rancher (1/3): 8 CPU, 16 GB RAM, and 256 GB of disk

Rancher v2.4.8

DNS Server if not provided:

DNS Server (1/2) with 2 CPU, 4GB RAM, and 50GB of disk

OnPremise minimal requirements:

Provision 1 or 2 Linux VMs (Ubuntu 18.04) depending on whether the edge node is going to be run in a separate node (recommended)

Resources:

Kubernetes Node

Minimum: 2 CPU cores, 4 GB RAM and 10 GB disk capacity.

Recommended: 4 CPU cores, 8 GB RAM and 50 GB disk capacity.

Edge Node (optional)

Minimum: 1 CPU core, 1 GB RAM

Recommended: 1 CPU core, 2 GB RAM

These numbers may change because a number of ZDMP components will be deployed; check the specific requirements of each component.

Software Requirements:

Docker

Docker-compose

Helm

RKE

K3s

Kubectl

Cert-manager

Nginx Ingress Controller

Bind9 DNS docker (edgenode)

Nginx docker (edgenode)

Web Browser

Software Requirements for testing:

K3s

Helm

Kompose

Docker

Docker-compose

Web Browser

Rancher

Gitlab account

Associated ZDMP services

Required

T6.4 Services and Message Bus

T5.2 Secure Communication

T5.2 Secure Authentication

T5.2 Secure Installation

T6.2 Marketplace

T6.4 Portal

Optional

Installation

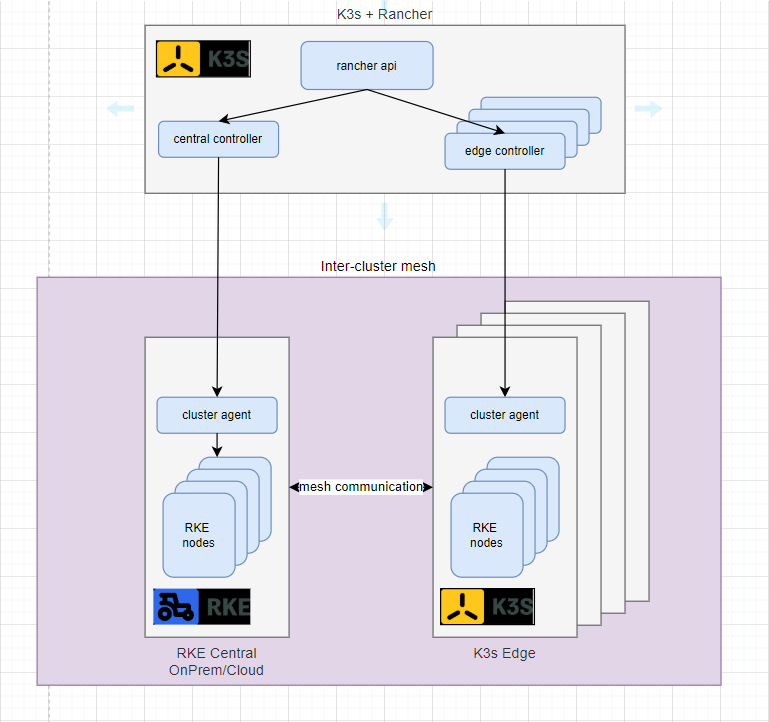

Two different approaches have been developed to install the platform. The original one, which was used to deploy the current ZDMP cloud platform, is based on RKE+Rancher Kubernetes and uses Ansible to install the platform. The second, miniZDMP, is based on K3s+Rancher and was mainly developed for developer partners and open call partners so that they can run a lightweight version of the ZDMP platform and deploy and integrate their own zApps in the same way they would on the ZDMP Cloud platform. The decision is still under consideration, but miniZDMP will most likely be the selected method for OnPremise deployment of the platform.

Cloud Platform

The following document describes the installation steps to deploy the ICE Integration Platform based on Rancher and Kubernetes.

The basic architecture for the platform is to deploy a dedicated Kubernetes cluster for Rancher which can be based on K3S or RKE.

In general, Rancher will be deployed on top of a K3S cluster.

Then a RKE based Kubernetes cluster will be deployed for the app deployments.

Finally, in certain use cases, it might be useful to deploy edge clusters, using K3S, for edge applications.

The app and edge clusters will be managed from the Rancher cluster.

This is a sample architecture diagram for the platform. Keep in mind that the Rancher cluster could be based on RKE, and there could be from 0 to n RKE or K3S clusters.

These are the components of the Integration Platform:

Docker

Kubernetes (RKE & K3S)

Rancher

CertManager (certificates management)

Istio (service mesh)

In order to make it easy to deploy the Integration Platform, Ansible is going to be used.

Some playbooks have been developed to install the platform:

install-user.yaml: this playbook creates the rancher user for the installation and distributes the ssh keys

install-docker.yaml: this playbook creates the basics for the platform. Performs some pre-reqs and installs Docker

install-rke.yaml: this playbook prepares the nodes with the prerequisites and installs the Kubernetes cluster (RKE). In addition, the playbook installs and configures kubectl and helm

install-certmanagerk8s.yaml: this playbook deploys Certmanager in the Kubernetes cluster.

install-rancherk8s.yaml: this playbook deploys Rancher in the Kubernetes cluster

install-k3s.yaml: this playbook deploys a K3s cluster

An installation overview of the cloud platform is presented below:

- Install Ansible: First you have to install ansible and set up the initial account using install-user.yaml playbook.

To run this first playbook you need to be root. The remaining playbooks will be run as rancher. You could also run this playbook as a different user with passwordless sudo privileges. In that case, modify the beginning of the playbook, replacing remote_user: root with the following:

remote_user: username

become: yes

become_user: root

become_method: sudo

- Install User: Once Ansible is installed, run the install-user.yaml playbook as root. This creates the “rancher” user and installs some prerequisites.

Now that the rancher user has been created, the remaining playbooks will be run as rancher.

Install Docker: We have to install Docker, so run the playbook install-docker.yaml which will perform some pre-reqs and install Docker

Install K3s+Rancher: Then we will deploy the Rancher cluster using K3s Kubernetes distribution using the install-k3s.yaml playbook, and then the Certmanager and Rancher on this cluster, using install-certmanagerk8s.yaml and install-rancherk8s.yaml playbooks

Install RKE: Once the Rancher K3s cluster has been installed and Certmanager and Rancher have been deployed, it is time to install the application Kubernetes RKE cluster with install-rke.yaml playbook

Notice that the Rancher cluster could also be an RKE cluster that would be installed as a regular RKE cluster.

The K3s cluster can also be deployed as an edge cluster for edge applications. It will be installed using the same playbook, but Certmanager and Rancher will not be deployed in this case to this cluster. If an edge cluster is going to be installed, there is no need to run install-docker.yaml.

In order to manage the application cluster and/or the edge clusters from the Rancher cluster, they have to be discovered using the Rancher UI.
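The playbook order from the overview above can be summarised with a short Python sketch that only prints the ansible-playbook invocations. The inventory file name hosts.ini is a placeholder, and the real playbooks may need additional variables or options that are not shown here.

```python
# Order taken from the installation overview; the first playbook runs as root,
# the remaining ones as the rancher user.
PLAYBOOK_ORDER = [
    "install-user.yaml",
    "install-docker.yaml",
    "install-k3s.yaml",
    "install-certmanagerk8s.yaml",
    "install-rancherk8s.yaml",
    "install-rke.yaml",
]


def playbook_commands(inventory):
    """Return one ansible-playbook argument list per playbook, in order."""
    return [["ansible-playbook", "-i", inventory, playbook]
            for playbook in PLAYBOOK_ORDER]


# "hosts.ini" is a hypothetical inventory file name.
for command in playbook_commands("hosts.ini"):
    print(" ".join(command))
```

For an edge cluster, only install-k3s.yaml from this list would apply, since Docker, Certmanager, and Rancher are not deployed there.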

miniZDMP

This is a tool to install a lightweight or mini version of ZDMP Kubernetes Platform. This was originally developed for developer partners and open call projects in order for them to deploy, test and integrate their zApps in the same way they would do on the cloud platform.

The tool is going to be the ZDMP on-premise version of the platform replacing the RKE version.

Some features of the latest release:

Rancher URL has been set in this version to fixed rancher.zdmp. This is to avoid using the same domain for rancher and the applications

The Ingress self-signed certificate is now created using cert-manager instead of openssl, and the domain has been set to *.zdmp.home

HTTP Support

Edge Node : to be deployed on the same kubernetes node or an additional node (recommended)

DNS Server with preset domain to *.zdmp.home

Nginx Server as Load Balancer

Removed k3s traefik load balancer to run edge node in the same k3s node (still recommended to use a separate node)

Pre-requisite checks (ie OS version)

Removed command line checks: miniZDMP is now installed with auto checks

Configuration file to install or re-install specific components

The requirements for installing miniZDMP are specified in the System Requirements section.

The miniZDMP installs these software utilities and specific tested compatible versions:

Docker

K3s (Kubernetes)

Helm

Cert-manager

Rancher

A self-signed certificate for use by the ingress controller

Nginx Ingress Controller

bind9 DNS docker (edgenode)

nginx docker (edgenode)

Once this is setup, the ZDMP app catalog can be registered, and apps can be deployed to miniZDMP.

An installation overview of the miniZDMP tool is presented here. The detailed steps can be found in the Marketplace miniZDMP asset:

Clone the t6.4-application-run-time repo

Deploy the edgenode DNS server if there is not a DNS server in place. If there is one, skip this step

Install docker with the script installdocker.sh

Update the DNS domain file if the zdmp.home domain is not used

Run the command run_dockerdns.sh as per instructions

Update the resolv.conf file with script static_resolv.sh (or alternatives - see instructions)

Run ‘echo "docker" >> minizdmp/minizdmp.cfg’ since Docker has already been installed during the edgenode deployment

Deploy minizdmp

Run the command minizdmp.sh ip as per instructions. This deploys the application-runtime platform

Deploy the edgenode nginx

Run the command run_nginx.sh

Access Rancher at https://rancher.zdmp (you may need to add the url to the /etc/hosts of your host machine if it is not using the minizdmp DNS)

Run the scripts patch_cluster.sh and patch_node.sh. If the edge node is in a different node than the k3s node, then edit the scripts and change the ip to the edge node one.

Access Rancher and register the catalog(s)

If http access is needed, run patch_nginx.sh

Deploy zdmp components from the Rancher UI (secure-auth and portal)

Any script required before deploying any component should be in the minizdmp/zComponents folder

Upon deployment of first component import the self-signed certificate in the trusted CA’s store

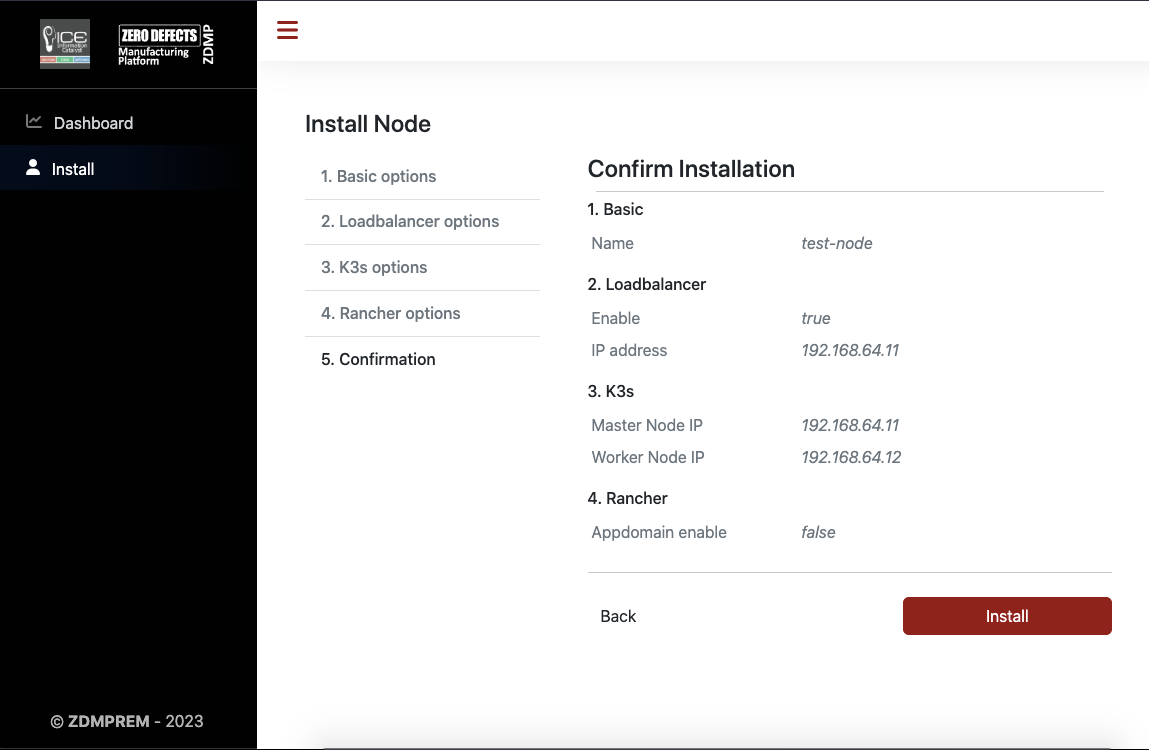

At the moment, a miniZDMP installer is being developed in order to install miniZDMP using a graphical interface. The installer is based on Angular for the frontend, Go for the API backend, Ansible running the installation process and Docker in order to package and distribute. This is just a preview with two snapshots, but the idea is to use this miniZDMP installer in order to configure the cluster according to user needs and run it. The user would run the docker image on a so-called installation node, configure the cluster nodes and some other configuration options, and then, the miniZDMP installer would install all required software.

Other Tools

Kompose Installation:

# Download

curl -L https://github.com/kubernetes/kompose/releases/download/v1.22.0/kompose-linux-amd64 -o kompose

# Permissions

chmod +x kompose

# Move file

sudo mv ./kompose /usr/local/bin/kompose

Docker-compose Installation:

# Install docker-compose

sudo curl -L "https://github.com/docker/compose/releases/download/1.24.0/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

# Permissions

sudo chmod +x /usr/local/bin/docker-compose

How to use

This section shows how to use the Application Run-time Component for development testing, with the following sections:

From docker-compose to Application Run-time:

Convert docker-compose.yaml to helm charts and Kubernetes resources

Convert to helm charts

Configure Helm charts to use secrets

Test Helm charts

Expose Services

Clean up Helm charts

Helm Charts Structure

Dynamic Values

Test Helm Charts

Rancher Charts

Questions and zApp information

Upload charts to Repository

Inside Application Run-time:

API Application runtime:

Admin Global users

Organizations

Services

Add Catalog (Helm Charts location)

Installing an Application

For further information check the documentation.

Convert docker-compose.yaml to helm charts and Kubernetes resources

Convert to helm charts

# Run

kompose convert -f docker-compose.yml -c --replicas=1

# Create Helm chart

# Usage

helm create {APP-NAME}

# e.g.:

helm create zdmp-portal

Configure Helm charts to use secrets

# Generate Token to download ZDMP images.

# Copy registry-secret.yml in templates directory from ./resources/charts/templates/registry-secret.yml

# Edit label in registry-secret.yml with the name from _helpers.tpl. E.g.: "zdmp-portal.labels"

# Add the following lines at the end of the _helpers.tpl file

{{- define "secret" }}

{{- printf "{\"auths\": {\"%s\": {\"auth\": \"%s\"}}}" .Values.privateRegistry.registryUrl (printf "%s:%s" .Values.privateRegistry.registryUser .Values.privateRegistry.registryPasswd | b64enc) | b64enc }}

{{- end }}

# Copy questions.yaml if it does not exist and amend with the information required by the app.

# Copy the following lines on deployments to replace imagePullSecrets, at the same level of containers. This line will inject the credentials in the deployment.

{{- if .Values.defaultSettings.registrySecret }}

imagePullSecrets:

- name: {{ .Values.defaultSettings.registrySecret }}

{{- end }}

# Add in values.yaml the following lines and change the SECRET-NAME with the name already provided in questions.

defaultSettings:

  registrySecret: "{ SECRET-NAME }"

privateRegistry:

  registryUrl: zdmp-gitlab.ascora.eu:8443

  registryUser: ""

  registryPasswd: ""

# Add namespace in all kubernetes files. At the same level of name.

metadata:

  namespace: {{ .Release.Namespace }}

  name: .....
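To make the encoding performed by the "secret" helper added to _helpers.tpl easier to follow, here is a Python sketch of the same two-step base64 encoding. The function name and the credentials are placeholders; in the chart the values come from privateRegistry in values.yaml.

```python
import base64
import json


def registry_secret(registry_url, user, password):
    """Mirror the two-step base64 encoding of the "secret" template."""
    # Inner step: base64 of "user:password", as used for registry basic auth.
    auth = base64.b64encode(f"{user}:{password}".encode()).decode()
    # Outer step: base64 of the Docker config JSON that holds the auth entry.
    config = '{"auths": {"%s": {"auth": "%s"}}}' % (registry_url, auth)
    return base64.b64encode(config.encode()).decode()


# Placeholder credentials, for illustration only.
encoded = registry_secret("zdmp-gitlab.ascora.eu:8443", "user", "token")
print(json.loads(base64.b64decode(encoded)))
```

Decoding the result, as the last line does, is a quick way to check that the registry URL and credentials were injected as expected.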

Expose Services

# Expose application to host

# To expose the application to the host, use a NodePort; otherwise, install Firefox and use the TCP port.

# NodePort: (default: 30000-32767)

# Add in services type: NodePort and nodePort: {NodePort-Number}

spec:

  type: NodePort

  ports:

    - targetPort: 80

      port: 80

      nodePort: {NodePort-Number}

  selector:

    app: myapp

    type: front-end

```YAML
# Expose application via ingress
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: {{ .Values.app.name }}-ingress
  namespace: {{ .Release.Namespace }}
  annotations:
    # use the shared ingress-nginx
    kubernetes.io/ingress.class: "nginx"
    nginx.ingress.kubernetes.io/proxy-body-size: 100m
spec:
  tls:
    - hosts:
        - {{ .Values.app.name }}.zdmp
      secretName: {{ .Values.app.name }}-tls
  rules:
    - host: {{ .Values.app.name }}.zdmp
      http:
        paths:
          - path: /
            backend:
              serviceName: {{ .Values.app.name }}
              servicePort: 8080
```

Clean up kubernetes yaml files

Delete metadata values not used in all files, for further information see documentation.

Helm Charts Structure

Dynamic Values

# Add values.yaml using this structure

service1:

  image:

    repository: repository_location

    imagename: image_name

    tag: tag_version

    imagePullPolicy: IfNotPresent

  service:

    name: Name_of_Service1

    replicas: NumberOfReplicas

  config:

    server_port: "PORT_Number"

  ...

service2:

  image:

# Replace the hard-coded values in the Kubernetes files with {{ .Values.* }} references

# e.g.

namespace: {{ .Release.Namespace }}

replicas: {{ .Values.service1.service.replicas }}

name: {{ .Values.service1.service.name }}-deployment

image: "{{ .Values.service1.image.repository }}/{{ .Values.service1.image.imagename }}:{{ .Values.service1.image.tag }}"

imagePullPolicy: {{ .Values.service1.image.imagePullPolicy }}

- containerPort: {{ .Values.service1.config.server_port }}
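As an illustration of how the image line above is assembled from values.yaml, the following Python sketch performs the same lookup and string composition on a plain dictionary. It is not how Helm renders templates internally, and the repository, image name, and tag are placeholder values.

```python
def image_reference(values, service):
    """Compose '<repository>/<imagename>:<tag>' from a values.yaml-style mapping."""
    image = values[service]["image"]
    return f"{image['repository']}/{image['imagename']}:{image['tag']}"


# Placeholder values following the structure shown above.
values = {
    "service1": {
        "image": {
            "repository": "registry.example/zdmp",
            "imagename": "portal",
            "tag": "1.0.0",
            "imagePullPolicy": "IfNotPresent",
        },
        "service": {"name": "portal", "replicas": 1},
        "config": {"server_port": "8080"},
    }
}

print(image_reference(values, "service1"))
```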

Test Helm Charts

# Copy chart in the k3s environment

helm template ./{APP-NAME}/{HELM-VERSION}/

# Install helm app

helm install { Component-Name } {Version-Path} --namespace { Namespace } --set defaultSettings.registrySecret={ SECRET-NAME },privateRegistry.registryUrl={ REPOSITORY-URL},privateRegistry.registryUser={ REPOSITORY-USERNAME },privateRegistry.registryPasswd={ Token-Or-Password },{ Variable5 }={ Value5 },{ Variable6 }={ Value6 }

# Status

helm status { Component-Name } -n { Namespace}

kubectl get all,pv,pvc,secrets -n { Namespace}

# Uninstall helm chart

helm uninstall { Component-Name } -n { Namespace}
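When the list of --set values grows, it can help to assemble the helm install command programmatically. The Python sketch below only builds the argument list; the function name, chart path, namespace, and secret name are placeholders. Values that contain commas would need escaping, which this sketch does not handle.

```python
def helm_install_command(name, chart_path, namespace, settings):
    """Build the 'helm install' argument list with a single --set option."""
    set_arg = ",".join(f"{key}={value}" for key, value in settings.items())
    return ["helm", "install", name, chart_path,
            "--namespace", namespace, "--set", set_arg]


# Placeholder names and values, for illustration only.
command = helm_install_command(
    "zdmp-portal",
    "./zdmp-portal/1.0.0/",
    "demo",
    {
        "defaultSettings.registrySecret": "regcred",
        "privateRegistry.registryUser": "user",
    },
)
print(" ".join(command))
```

The resulting list can be handed to subprocess.run on a machine where helm is installed and configured for the target cluster.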

Rancher Charts

Questions and zApp info.

Add rancher files:

app-readme.md: It provides descriptive text in the chart’s UI header

questions.yml: Form questions displayed within the Rancher UI. It simplifies the deployment of a chart; without it, the deployment must be configured using key-value pairs

README.md: This text displays in Detailed Descriptions. It contains:

Description of the application

Prerequisites or requirements to run the chart

Descriptions of options in values.yaml and default values

Information that may be relevant to the installation or configuration of the chart

For further information see documentation.

Upload charts to Repository

Make sure you clone/pull the latest changes from the repository.

- zComponents catalog

url: https://gitlab-zdmp.platform.zdmp.eu/zdmp-catalog/zdmp-components.git

- zApps catalog

url: https://gitlab-zdmp.platform.zdmp.eu/zdmp-catalog/zapps.git

- external zApps catalog (open calls)

url: https://gitlab-zdmp.platform.zdmp.eu/zdmp-catalog/external-zapps.git

All the component Charts will be stored inside the chart folder.

# Status

git status

# Add files

git add .

# Commit message

git commit -m "Added Chart Component {Name} *****"

# Push Changes

git push

API Application runtime

Further information about the API can be found in this link.

Admin Global users

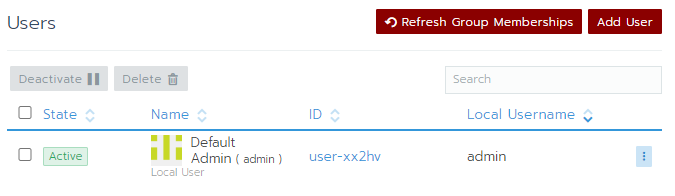

For system administrators to use the Application Run-time, the relevant users or groups need to be added in the global section of the Application Run-time. Users can be added:

- Using the Web UI: Menu Global – Security – Add Users or Groups

- Using API: To add a user use the following API call on the terminal with access to the cluster configuration

# PUT

https://{Application run-time URL}/v3/keyCloakConfigs/keycloak

Organizations

To add a new organization a project needs to be created.

- Using the Web UI: Select Cluster – Projects/Namespaces – Add Project

- Using API: To add a Project use the following API call on the terminal with access to the cluster configuration.

# POST

https://{Application run-time URL}/v3/project?_replace=true

# POST

https://{Application run-time URL}/v3/projects/{clusterid}:{projectid}?action=setpodsecuritypolicytemplate

Services

Using the Application Runtime, users can install Apps using the UI in the project assigned to their organizations. A service NodePort is exposed per application backend and linked to the API gateway.

| Component | Protocol | Port |
|---|---|---|
| Rancher UI | TCP | 80 |
| NodePort | TCP/UDP | 30000-32767 |
| Rancher agent | TCP | 443 |
| SSH provisioning | TCP | 22 |
| Docker daemon TLS | TCP | 2376 |
| K8s API server | TCP | 6443 |
| Nginx | HTTP | 30080, 30443 |

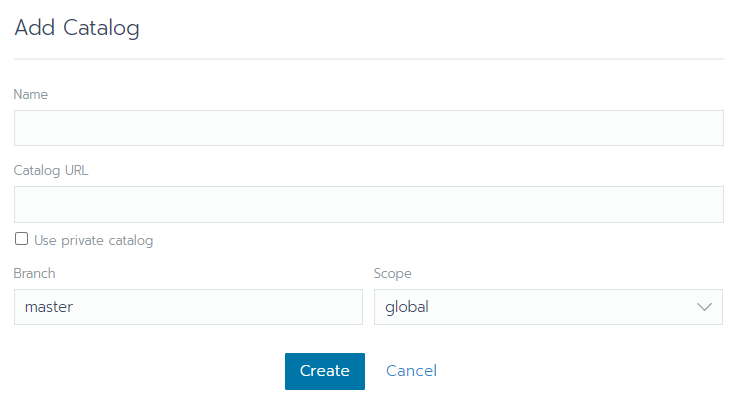

Add Catalog (Helm Charts) location

- Using the Web UI: After installation, the location of the helm charts needs to be imported from the menu Tools – Catalogs - Add Catalog

Name: Name of the catalog

Catalog URL: Repository location of the Helm chart.

- Using API: To add the repository use the following API call on the terminal with access to the cluster configuration.

# POST

https://{Application run-time URL}/v3/projectcatalog

Installing an Application

- Using the Web UI: The application can be installed from the UI using the menu APPS – Launch

- Using API: To install a zApp use the following API call:

# POST

https://{Application run-time URL}/v3/projects/{clusterid}:{projectid}/app
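A minimal Python sketch of how such a POST request could be assembled is shown below. It builds the request without sending it. The host, cluster ID, project ID, and payload are placeholders: the fields the endpoint actually expects are not listed in this document, so the payload here is not the real schema, and the authentication header described in the API section is omitted for brevity.

```python
import json
from urllib.request import Request


def build_app_install_request(base_url, cluster_id, project_id, payload):
    """Build a POST request for the project-scoped app endpoint."""
    url = f"{base_url.rstrip('/')}/v3/projects/{cluster_id}:{project_id}/app"
    body = json.dumps(payload).encode()
    return Request(url, data=body, method="POST",
                   headers={"Content-Type": "application/json"})


# Placeholder identifiers and payload, for illustration only.
request = build_app_install_request(
    "https://runtime.example", "c-abc", "p-xyz", {"name": "demo"})
print(request.get_method(), request.full_url)
```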